Hidden Markov Models

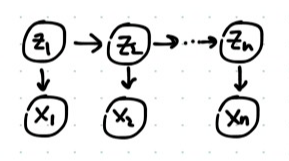

A Hidden Markov Model (HMM) consists of a set of observed variables $X_1,\dots,X_n$ and hidden variables $Z_1,\dots,Z_n \in \lbrace 1,\dots,m \rbrace$. The observed variables $X_k$ can be discrete, real-valued, or of any other type, while the hidden variables $Z_k$ are discrete. The HMM is represented by a graphical model called a trellis diagram.

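The joint distribution represented by this graphical model is:

$$p(x_1,\dots,x_n,z_1,\dots,z_n) = p(z_1)\,p(x_1 \vert z_1) \prod_{k=2}^{n} p(z_k \vert z_{k-1})\,p(x_k \vert z_k)$$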

Parameters of HMM

The parameters of an HMM are the probabilities in the joint distribution above. We introduce some notation for these probabilities and give them names, but remember that they are just densities (or PMFs in the discrete case).

- Transition probabilities: $T(i,j) = p(Z_{k+1}=j \vert Z_k=i) \hspace{10mm} (i,j \in \lbrace 1,\dots,m \rbrace)$. Notice that these probabilities form an $m \times m$ transition matrix whose rows each sum to 1.

- Emission probabilities: $\varepsilon_i(x) = p(X_k=x \vert Z_k=i) \hspace{10mm} (i \in \lbrace 1,\dots,m \rbrace)$

- Initial distribution: $\pi(i) = p(Z_1=i) \hspace{10mm} (i \in \lbrace 1,\dots,m \rbrace)$
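To make the three parameter sets concrete, here is a minimal sketch in Python with NumPy. The numbers are invented for illustration, the emissions are assumed to be discrete with three symbols, and states are 0-indexed rather than running from 1 to $m$. The helper `is_valid_hmm` is a hypothetical name, not part of any library.

```python
import numpy as np

# Hypothetical 2-state HMM (m = 2) with 3 possible observation symbols.
# All values are made up for illustration; states and symbols are 0-indexed.
pi = np.array([0.6, 0.4])            # pi[i]   = p(Z_1 = i)
T = np.array([[0.7, 0.3],
              [0.2, 0.8]])           # T[i, j] = p(Z_{k+1} = j | Z_k = i)
E = np.array([[0.5, 0.4, 0.1],
              [0.1, 0.3, 0.6]])      # E[i, x] = eps_i(x) = p(X_k = x | Z_k = i)


def is_valid_hmm(pi, T, E):
    """Check that pi and every row of T and E are probability distributions."""
    m = len(pi)
    shapes_ok = T.shape == (m, m) and E.shape[0] == m
    nonneg = (pi >= 0).all() and (T >= 0).all() and (E >= 0).all()
    sums_ok = (np.isclose(pi.sum(), 1.0)
               and np.allclose(T.sum(axis=1), 1.0)
               and np.allclose(E.sum(axis=1), 1.0))
    return bool(shapes_ok and nonneg and sums_ok)


print(is_valid_hmm(pi, T, E))
```

Each row of `T` is the conditional distribution of the next state given the current one, which is why the rows, not the columns, must sum to 1.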

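With these abbreviations, we rewrite the joint density:

$$p(x_1,\dots,x_n,z_1,\dots,z_n) = \pi(z_1)\,\varepsilon_{z_1}(x_1) \prod_{k=2}^{n} T(z_{k-1},z_k)\,\varepsilon_{z_k}(x_k)$$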
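The joint density is a product of one initial term, one transition term per step, and one emission term per observation, so it can be evaluated with a single pass over the sequence. The sketch below assumes discrete emissions stored in a matrix `E` and 0-indexed states and symbols; the function name `joint_log_prob` and the parameter values are illustrative only. It works in log space to avoid underflow on long sequences.

```python
import numpy as np


def joint_log_prob(x, z, pi, T, E):
    """Log of p(x_1..x_n, z_1..z_n) for a discrete-emission HMM.

    x, z : sequences of observation symbols and hidden states (0-indexed).
    pi[i] = p(Z_1 = i), T[i, j] = p(Z_{k+1} = j | Z_k = i),
    E[i, s] = p(X_k = s | Z_k = i).
    """
    # Initial state and its emission.
    logp = np.log(pi[z[0]]) + np.log(E[z[0], x[0]])
    # One transition term and one emission term for each later step.
    for k in range(1, len(x)):
        logp += np.log(T[z[k - 1], z[k]]) + np.log(E[z[k], x[k]])
    return logp


# Made-up parameters for a 2-state, 3-symbol model.
pi = np.array([0.6, 0.4])
T = np.array([[0.7, 0.3],
              [0.2, 0.8]])
E = np.array([[0.5, 0.4, 0.1],
              [0.1, 0.3, 0.6]])

# p(x=(0,2), z=(0,1)) = 0.6 * 0.5 * 0.3 * 0.6, approximately 0.054
p = np.exp(joint_log_prob([0, 2], [0, 1], pi, T, E))
```

Summing this quantity over every possible pair of sequences `(x, z)` of a fixed length gives 1, which is a quick sanity check on the factorization.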

The $\varepsilon$s are pretty much arbitrary; the structure of the graph is what gives the model power.
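One way to see that the emissions are interchangeable is to sample from the model with the emission step passed in as a function. The sketch below keeps the same chain structure (initial distribution, then transitions) and plugs in Gaussian emissions as one arbitrary choice. The names `sample_hmm` and `gaussian_emit`, the means, and the unit variance are all assumptions made for this example.

```python
import numpy as np


def sample_hmm(n, pi, T, emit, rng):
    """Draw hidden states z_1..z_n and observations x_1..x_n.

    emit(i, rng) returns one observation given hidden state i; the chain
    structure does not depend on what kind of value it produces.
    """
    m = len(pi)
    z = np.empty(n, dtype=int)
    x = []
    z[0] = rng.choice(m, p=pi)                 # Z_1 ~ pi
    x.append(emit(z[0], rng))
    for k in range(1, n):
        z[k] = rng.choice(m, p=T[z[k - 1]])    # Z_k ~ T(z_{k-1}, .)
        x.append(emit(z[k], rng))
    return z, np.array(x)


# Made-up parameters: 2 hidden states (0-indexed), real-valued observations.
pi = np.array([0.6, 0.4])
T = np.array([[0.7, 0.3],
              [0.2, 0.8]])
means = np.array([-2.0, 3.0])  # hypothetical per-state Gaussian means


def gaussian_emit(i, rng):
    # Emission density eps_i: normal with state-dependent mean, unit variance.
    return rng.normal(loc=means[i], scale=1.0)


rng = np.random.default_rng(0)
z, x = sample_hmm(10, pi, T, gaussian_emit, rng)
```

Swapping `gaussian_emit` for a function that draws from a discrete table, or from any other distribution, leaves `sample_hmm` unchanged.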